This article evaluates and demonstrates the capabilities of Fivetran and showcases the improvements that replacing NiFi with Fivetran could bring to the ingestion process currently implemented at Regeneron for the data ecosystem.

NiFi Limitations

NiFi has several limitations, listed below, that are likely to become problems in the near future as data volumes grow and as ingestion of that data into the overall data ecosystem needs to be streamlined.

- No managed service offering.

- No alerting mechanism provided by the tool itself.

- No hook for the Airflow/MWAA scheduler to submit jobs to Spark once ingestion is complete.

- NiFi scalability issues.

Comparative Analysis

The tables below compare NiFi and Fivetran on the high-level capabilities each product offers.

Supported Formats

| NiFi | Fivetran |
|---|---|
| NiFi can read different file formats (for example Avro and JSON) and convert them to CSV, using processors such as GetFile and ConvertRecord. | Separated-value files (CSV*, TSV, etc.); JSON text files delimited by new lines; JSON arrays; Avro; compressed files (Zip, tar, GZ); Parquet; Excel |

Source Integration

| NiFi | Fivetran |
|---|---|
| Connects to the following sources: S3, Google Cloud, Azure Blob. A CData JDBC driver pair is required for the following sources: Box, Dropbox, Google Drive, OneDrive, SharePoint. | Syncs with the following cloud-based storage: S3, Azure Blob, Google Cloud. Magic Folder connectors sync any supported file from your cloud folder as a table within a schema in your destination. Sync supported through Magic Folder: Box, Dropbox, Google Drive, OneDrive, SharePoint. |

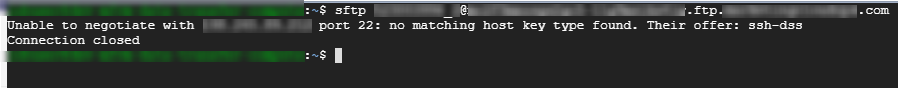

File Transfer Protocols

| NiFi | Fivetran |
|---|---|
| FTP, SFTP | FTP, FTPS, SFTP |

Supported Database Sources

| NiFi | Fivetran |
|---|---|
| MongoDB, PostgreSQL, MySQL, Oracle, MS SQL, MariaDB (via CData JDBC driver) | MongoDB, MariaDB, MySQL, Oracle, PostgreSQL, SQL Server |

Logging

| NiFi | Fivetran |
|---|---|
| nifi-bootstrap.log, nifi-user.log, nifi-app.log | In the dashboard; in an external logging service; in your destination using the Fivetran Log Connector |

Transformations

| NiFi | Fivetran |
|---|---|
| Jolt (JoltTransformJSON processor); XSLT (TransformXml processor); script-based data transformation (ExecuteScript processor) | Basic SQL transformations; dbt transformations. dbt is open-source software that lets you perform sophisticated data transformations in your destination using simple SQL statements. With dbt, you can: write and test SQL transformations; use version control with your transformations; create and share documentation about your dbt transformations; view data lineage graphs. |

Alerting

| NiFi | Fivetran |
|---|---|
| Use the MonitorActivity processor to alert on changes in flow activity by routing the alert to the PutEmail processor. | Alerts appear only on the dashboard, but if a sync fails Fivetran can send an email notification, provided notifications are enabled. NOTE: Tasks describe a problem that keeps Fivetran from syncing your data. Warnings describe a problem that you may need to fix but that does not keep Fivetran from syncing your data. |

Listener

| NiFi | Fivetran |
|---|---|
| Maintains state for incremental loads using a state object; event-based triggering is supported; scheduling is also supported | Maintains state for incremental loads using a state object; event-based triggering is supported; scheduling is also supported |
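The incremental-load behavior in the Listener row can be illustrated with a tool-agnostic sketch. The function below is hypothetical and uses neither NiFi's nor Fivetran's API; the `last_modified` state key and the shape of the file listing are assumptions made for illustration. It shows how a persisted state object lets each run pick up only the files that changed since the previous run.

```python
from typing import Dict, List, Tuple

# Hypothetical listing entry: {"path": ..., "modified": ISO-8601 UTC timestamp}
FileEntry = Dict[str, str]


def select_new_files(
    listing: List[FileEntry], state: Dict[str, str]
) -> Tuple[List[FileEntry], Dict[str, str]]:
    """Return files modified after the stored watermark, plus the updated state."""
    # Timestamps in one fixed ISO-8601 UTC format compare correctly as strings.
    watermark = state.get("last_modified", "")
    new_files = sorted(
        (f for f in listing if f["modified"] > watermark),
        key=lambda f: f["modified"],
    )
    # Advance the watermark only when something new was found.
    if new_files:
        state = {**state, "last_modified": new_files[-1]["modified"]}
    return new_files, state


listing = [
    {"path": "a.csv", "modified": "2023-01-01T00:00:00Z"},
    {"path": "b.csv", "modified": "2023-01-02T00:00:00Z"},
]
first_batch, state = select_new_files(listing, {})      # first run: everything is new
second_batch, state = select_new_files(listing, state)  # second run: nothing changed
print(len(first_batch), len(second_batch))
```

A strict "greater than" comparison keeps the example short, but it would miss a file that arrives later with the same timestamp as the watermark; a real listener would need a tie-breaker such as the file path.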

Scalability

| NiFi | Fivetran |
|---|---|
| Possible but difficult | Possible but difficult |

Trigger for Auto-Start Transformation Job

| NiFi | Fivetran |
|---|---|
| No trigger; must rely on scheduled times. | Integration with Apache Airflow is supported. Data transformations can be triggered from Fivetran syncs. |
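One way an orchestrator such as Airflow could gate a downstream Spark job on ingestion is to poll the connector's status and start the job only after a sync has succeeded. The sketch below shows only that decision logic. The field names `succeeded_at` and `failed_at` are assumed to match what the Fivetran REST API returns for a connector, and the helper name `sync_finished` is hypothetical; verify both against the current API documentation.

```python
from datetime import datetime, timezone
from typing import Optional


def _parse(ts: Optional[str]) -> Optional[datetime]:
    """Parse an ISO-8601 timestamp such as '2023-01-02T03:04:05Z' (assumed format)."""
    if not ts:
        return None
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def sync_finished(connector: dict, triggered_at: datetime) -> Optional[bool]:
    """Return True on success, False on failure, None while the sync is still running.

    `connector` is the (assumed) status payload for one connector.
    """
    succeeded = _parse(connector.get("succeeded_at"))
    failed = _parse(connector.get("failed_at"))
    # Only outcomes recorded after our trigger time belong to this sync.
    if succeeded and succeeded > triggered_at:
        return True
    if failed and failed > triggered_at:
        return False
    return None


triggered = datetime(2023, 1, 2, 3, 0, tzinfo=timezone.utc)
status = {"succeeded_at": "2023-01-02T03:04:05Z", "failed_at": None}
print(sync_finished(status, triggered))
```

In Airflow, logic like this would typically sit inside a sensor's `poke` method, with the Spark submission defined as the downstream task.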

Destination / Warehouses

| NiFi | Fivetran |
|---|---|
| S3, PostgreSQL, MongoDB, MySQL, Oracle, MS SQL, MariaDB (via CData JDBC driver) | Azure Synapse, BigQuery, Databricks, MySQL (beta), Panoply, Periscope, PostgreSQL, Redshift, Snowflake, SQL Server |

Account management

| NiFi | Fivetran |
|---|---|
| Client certificates, username/password, Apache Knox, OpenID Connect | IAM / user authentication is possible through: Azure AD (beta), Google Workspace (beta), Okta, OneLogin, PingOne |

Version Control

| NiFi | Fivetran |
|---|---|
| GitHub | GitHub, using an account that has permissions for the following GitHub scopes: repo, read:org, admin:org_hook, admin:repo_hook |

Configuration REST API

| NiFi | Fivetran |
|---|---|
| The configuration API can manage: Access, Controller, Controller Services, Reporting Tasks, Flow, Process Groups, Processors, Connections, FlowFile Queues, Remote Process Groups, Provenance | Available only for Standard, Enterprise, and Business Critical accounts: User Management API, Group Management API, Destination Management API, Connector Management API, Certificate Management API |
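As a rough illustration of calling the Fivetran REST API, the sketch below builds (but does not send) an authenticated request. It assumes the API is served at `https://api.fivetran.com/v1` and uses HTTP Basic authentication with an API key and secret; the `groups` path and the credentials shown are placeholders, so check them against the current API reference before relying on them.

```python
import base64
import urllib.request

API_BASE = "https://api.fivetran.com/v1"  # assumed base URL


def build_request(path: str, api_key: str, api_secret: str) -> urllib.request.Request:
    """Build a GET request with a Basic auth header; nothing is sent here."""
    token = base64.b64encode(f"{api_key}:{api_secret}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{API_BASE}/{path.lstrip('/')}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )


# Placeholder credentials; real ones would come from a secrets store.
req = build_request("groups", "my-key", "my-secret")
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. Keeping construction separate from sending makes the authentication logic easy to check without network access.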

Functions / Templates

| NiFi | Fivetran |
|---|---|
| Yes | For a custom data source or a private API that Fivetran does not support, you can develop a serverless ELT data pipeline using Function connectors. |
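To give a feel for what a Function connector involves, here is a minimal sketch of a handler for a source Fivetran does not support natively. The request and response keys (`state`, `insert`, `schema`, `hasMore`) follow the envelope Fivetran's function connectors are understood to use, but treat them as assumptions to confirm in the Fivetran documentation. `SOURCE_ROWS`, `fetch_rows_after`, and the `items` table are hypothetical stand-ins for a private API.

```python
from typing import Any, Dict, List

# Hypothetical stand-in for data behind an unsupported source or private API.
SOURCE_ROWS = [
    {"id": 1, "name": "alpha"},
    {"id": 2, "name": "beta"},
    {"id": 3, "name": "gamma"},
]


def fetch_rows_after(cursor: int, limit: int) -> List[Dict[str, Any]]:
    """Return up to `limit` rows whose id is greater than `cursor`."""
    return [r for r in SOURCE_ROWS if r["id"] > cursor][:limit]


def handler(request: Dict[str, Any], page_size: int = 2) -> Dict[str, Any]:
    """Return one page of new rows plus the state needed to fetch the next page."""
    cursor = (request.get("state") or {}).get("cursor", 0)
    # Fetch one extra row so we can tell whether another page exists.
    rows = fetch_rows_after(cursor, page_size + 1)
    page = rows[:page_size]
    new_cursor = page[-1]["id"] if page else cursor
    return {
        "state": {"cursor": new_cursor},               # carried into the next call
        "insert": {"items": page},                     # rows to upsert, per table
        "schema": {"items": {"primary_key": ["id"]}},  # key used for upserts
        "hasMore": len(rows) > page_size,              # ask to be called again
    }


first = handler({"state": {}})
second = handler({"state": first["state"]})
print(first["hasMore"], second["hasMore"])
```

The handler is written as a plain function so it can be exercised locally; deploying it would mean wrapping it in whichever serverless runtime the connector targets.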

Language Supported

| NiFi | Fivetran |
|---|---|
| Python, Java | Python, Java, Go, Node.js |

Streaming